Artificial intelligence (AI) is rapidly transforming our world, from how we shop to how doctors diagnose illness. But with this incredible power comes a hidden danger: bias. Because AI systems learn from data created by people, they can inherit, and even amplify, the biases present in that data.

So, what exactly is bias in AI? It occurs when an AI system produces systematically unfair outcomes for certain groups of people. This often happens because its algorithms learned patterns from data that reflects real-world prejudices. Here's how it can play out:

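- Algorithmic Bias: Imagine an AI system used for loan approvals. If its training data consisted mostly of loans approved for white applicants with high credit scores, the system might learn to reject applicants of color or those with lower credit scores, even when they would repay their loans. This can happen even if race is never an explicit input, because other features, such as ZIP code, can act as stand-ins for it.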

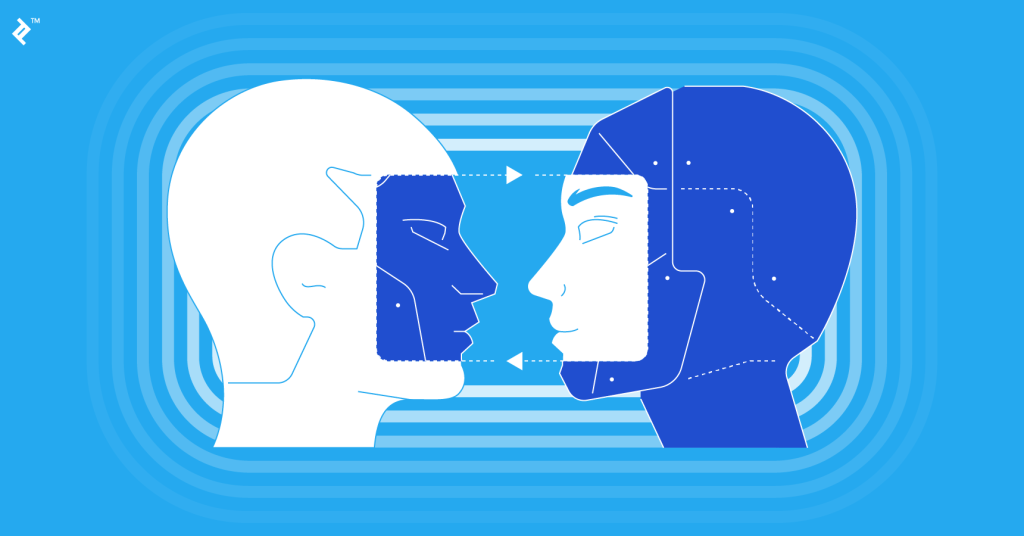

- Data Bias: Let's say an AI facial recognition system is trained on a dataset made up mostly of Caucasian faces. The system may then be noticeably less accurate at identifying people with darker skin tones.

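The consequences of bias in AI can be far-reaching. It can perpetuate discrimination in areas like hiring, loan approvals, and even criminal justice. For example, AI-powered risk assessment tools used in criminal justice have been shown to disproportionately flag people of color as high-risk: a 2016 ProPublica analysis of the COMPAS tool found that Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to be labeled higher risk.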
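To make "disproportionately flag" a little more concrete, one common way to audit a risk tool is to compare, group by group, how often people are flagged as high-risk and how often people who did *not* reoffend were flagged anyway (the false positive rate). The Python sketch that follows is a minimal illustration under stated assumptions: the function name `group_rates` and the toy records are hypothetical and are not taken from COMPAS or any real tool.

```python
from collections import defaultdict


def group_rates(records):
    """Compute, for each group, the share of people flagged high-risk and the
    false positive rate (flagged high-risk but did not reoffend).

    records: iterable of (group, flagged, reoffended) tuples, where
    flagged and reoffended are booleans.
    """
    counts = defaultdict(lambda: {"n": 0, "flagged": 0, "neg": 0, "fp": 0})
    for group, flagged, reoffended in records:
        c = counts[group]
        c["n"] += 1
        c["flagged"] += int(flagged)
        if not reoffended:
            # Only people who did not reoffend can be false positives.
            c["neg"] += 1
            c["fp"] += int(flagged)

    rates = {}
    for group, c in counts.items():
        rates[group] = {
            "flag_rate": c["flagged"] / c["n"],
            # Undefined (NaN) if nobody in the group was a non-reoffender.
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else float("nan"),
        }
    return rates


# Hypothetical toy data, for illustration only: (group, flagged_high_risk, reoffended)
toy = [
    ("A", True, False), ("A", True, True), ("A", False, False), ("A", True, False),
    ("B", False, False), ("B", True, True), ("B", False, False), ("B", False, False),
]

for group, r in sorted(group_rates(toy).items()):
    print(group, r)
```

In this made-up data, group A's false positive rate is 2/3 while group B's is 0, even though each group has exactly one person who actually reoffended. A gap like that is the kind of signal auditors look for; real audits would use much larger datasets and several fairness metrics, since different metrics can conflict.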

So, what can be done?

- Diverse Datasets: Training AI systems on data that reflects the real world’s diversity is crucial. This means including people of different races, genders, and backgrounds.

- Human Oversight: AI should be a tool to augment human decision-making, not replace it. Humans can identify and correct for potential bias in the system’s outputs.

- Transparency and Explainability: Developers need to build AI systems that are transparent about how they reach decisions. When it is clear why a system made a decision, it becomes much easier to spot and fix bias.

Addressing bias in AI is an ongoing challenge. But by staying aware of the issue and taking concrete steps to mitigate it, we can help make AI a force for good that benefits everyone.

Let’s keep the conversation going! Share your thoughts on AI bias in the comments below.
